Wicked Problems in Information Systems and R&D

A main interest of mine for a number of years has been complex systems theory and “Wicked Problems” - systemic problems which are “unstructured, open-ended, multi-dimensional, and tending to change over time.” Examples of wicked problems can be seen in the human body (as disease), cultural or organizational systems (conflict, political failings, racism, the prisoner’s dilemma), ecological systems (climate change, mass extinction), financial systems (financial collapse, inequality, tragedy of the commons), and anywhere else there is complexity. Software and information projects are no strangers to complexity, and much of their success or failure is determined by how they dance with wicked problems.

In this article I will outline a few wicked problems, especially those in information systems and R&D, as well as some things to keep in mind to improve the chances of survival. As we go, it’s worth considering how many of these problems generalize to other dynamics in life.

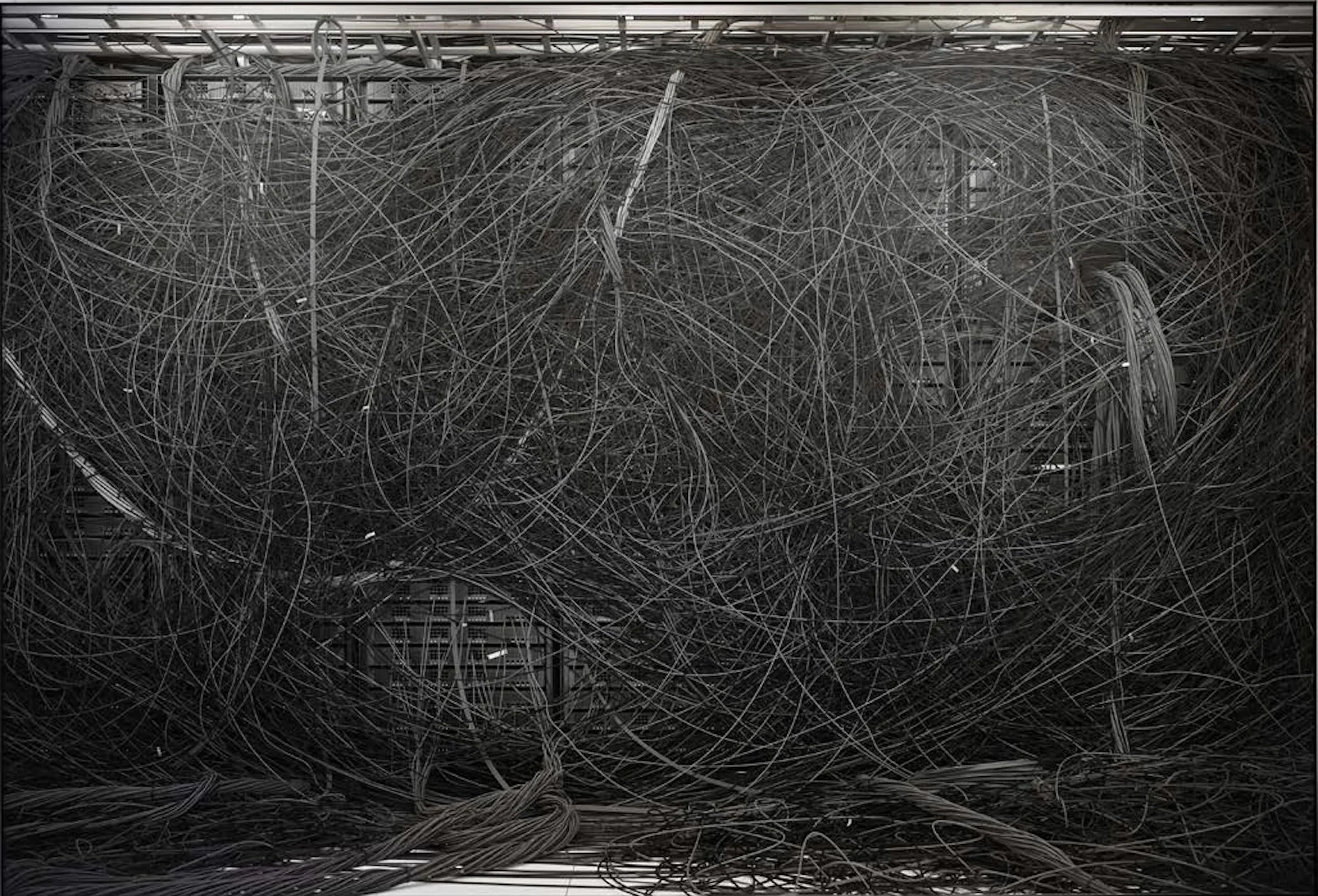

Dendrite II by Nicolas Baier

The Foundation Problem

There is a saying in systems architecture: “All the biggest mistakes are made on the first day.” In the first meeting, all the outcomes for the entire project have already been determined. Everything happening downstream depends on priors and preconceived notions, the scope and goals, who is involved, their flexibility and adaptability as individuals, and the context and incentives of the systems they exist inside. All of this happens before a single line of code is written.

“We believe this thing in particular is needed, therefore we will create this team. Everything that team ever does stems from this initial belief. Let us never speak of this again.”

I think of this as “the foundation problem”: these early beliefs and decisions are like the foundation of a house or building. As time (and money) passes, the foundation has more and more impact on the shape of the structure built on top of it. Early, formative decisions and beliefs tend to compound over time. If there are cracks or imperfections in the foundation (there are!) those cracks create misalignments and complexity that become amplified across time. It is just not feasible to build a straight house on a skewed foundation, and every foundation is skewed in some way.

You might not think this has anything to do with information systems or R&D, but at the end of the day the value an information system creates is entirely dependent on its foundations and context. The reason we create information systems in the first place, and everything that happens thereafter, comes from the beliefs individuals or cultures hold about those systems and what they demand of them.

To address the foundation problem we can do two things: deeply consider and verify beliefs (and their roots or foundations), and build adaptability and optionality into the system to be able to integrate and adapt to new information. This leads us to our next problem:

The Adaptation Problem

Everything in the world is always changing. Change is the only constant. Cultures are changing, organizations are changing, individuals are changing. The context that created your work and skillset is changing. Information slowly degrades in storage. Mountains are always eroding and moving, sometimes they even explode! It should come as no surprise when static systems break in their encounter with the endless dynamics of the real world.

It will always be cheaper and simpler to create a static system – for this reason static things will always be made, and we will always be fixing them. When a static system exists in a dynamic environment, there are endless, almost fractal ways for it to break at the interface between the two (the legal system being a perfect example). When static systems inevitably break, we depend on the adaptability and flexibility of individuals to step in and mend them. If nobody heals the wound, the system disintegrates. A system that creates static components must ultimately be adaptable in order to survive. This mending process becomes a key source of resilience, but also the source of evolution and revision.

A Kintsugi bowl from Korea — image from Wikipedia

In developing information systems, the implication is that when we define a system, we should also consider how it needs to change and evolve over time, embracing that change as opportunity. If a system is well modularized it can be sustained and even improved, and over time the systems that mend and adapt the best are the ones that stick around. In some ways SaaS is emblematic of this shift in thinking – we are gradually changing the way we think about software and information systems from being like static physical systems to dynamic, continuously updated, self-healing and evolving entities.

The Blurry Things Problem

It’s impossible to form a clear picture of something that is actually blurry. Some things are inherently indefinite. If you are walking towards the back of a train, are you moving forwards or backwards? If you say “Well that’s simple, just subtract the walking speed from the movement of the train” you’re forgetting that the earth is also spinning and orbiting around the sun, that the solar system is moving through the galaxy, and that it’s unclear whether the universe itself is moving (or whether that question even makes sense).

It’s a completely different question if we ask whether we are moving towards or away from the dining car. The way in which we frame things determines whether solutions are possible, or whether we’re in a never-ending nest of contradictions. When we set out to engineer something, we are always doing it with reference to some frame, and a key part of R&D is deciding what that frame is.
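To make the train example concrete, here is a minimal sketch of how the same walk gets a different answer depending on the frame we measure it in. The speeds are made-up numbers, and the function name is just for illustration.

```python
# Hypothetical speeds in metres per second.
# Positive means "toward the front of the train".
TRAIN_SPEED_OVER_GROUND = 30.0  # assumed speed of the train relative to the ground
WALK_SPEED_IN_TRAIN = -1.5      # walking toward the back, relative to the train


def velocity_in_frame(velocity_in_train: float, frame_velocity_in_train: float) -> float:
    """Re-express a velocity measured in the train frame in another frame.

    frame_velocity_in_train is how fast the chosen frame itself moves,
    as seen from the train (simple Galilean subtraction).
    """
    return velocity_in_train - frame_velocity_in_train


# Frame 1: the train itself, which is at rest in its own frame.
relative_to_train = velocity_in_frame(WALK_SPEED_IN_TRAIN, 0.0)

# Frame 2: the ground, which slides backwards at the train's speed
# when seen from inside the train.
relative_to_ground = velocity_in_frame(WALK_SPEED_IN_TRAIN, -TRAIN_SPEED_OVER_GROUND)

print(relative_to_train)   # negative: moving backwards along the train
print(relative_to_ground)  # positive: still moving forwards over the ground
```

Neither number is “the” answer. Each is correct only once the frame has been chosen, which is why picking the frame is part of the engineering work rather than a detail.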

If we choose the wrong frame (or goal), no amount of effort or money will result in more definite results because the goal itself is blurry. If our goal is to “make a thing for people”, every person will interpret that goal differently, every person will make different things, they will make it for different people, and measure success in different ways. This might even be a good thing for exploratory phases, but becomes disastrous for coordination.

Likewise, when the problem itself is clearly defined and solvable but our information or feedback about it is blurry or indefinite, we will create “fuzzy” outcomes.

To get around this, a deep sense of clarity should exist around goals, and our information and feedback should ideally be clean enough to show how close we are to achieving them.

The Technical Debt Problem

It’s almost always possible to trade reliability or adaptability for implementation speed. We could sit down at our computer and work for 24 hours a day, but we’d burn out pretty quickly and the quality of work would suffer. We could rush a project to completion to meet a quarterly deadline, but doing so might mean a team needs to rebuild that module every quarter. This is essentially like taking out a loan: eventually you have to repay it.
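The loan analogy can be sketched with some rough arithmetic. The figures below are invented purely for illustration (days to ship, days of rework per quarter), and the helper names are hypothetical; the point is only that a cheaper start with higher recurring cost is eventually overtaken.

```python
from typing import Optional, Tuple

# Each option is (upfront_days, upkeep_days_per_quarter). All numbers are made up.
RUSHED: Tuple[float, float] = (10.0, 8.0)   # quick to ship, heavy rework every quarter
CAREFUL: Tuple[float, float] = (25.0, 2.0)  # slower to ship, light upkeep every quarter


def cumulative_cost(upfront_days: float, upkeep_days_per_quarter: float, quarters: int) -> float:
    """Total effort after a number of quarters: initial build plus recurring upkeep."""
    return upfront_days + upkeep_days_per_quarter * quarters


def break_even_quarter(
    rushed: Tuple[float, float],
    careful: Tuple[float, float],
    horizon: int = 40,
) -> Optional[int]:
    """First quarter at which the careful option costs no more than the rushed one.

    Returns None if that never happens within the horizon.
    """
    for quarter in range(horizon + 1):
        if cumulative_cost(*careful, quarter) <= cumulative_cost(*rushed, quarter):
            return quarter
    return None


print(break_even_quarter(RUSHED, CAREFUL))
```

With these invented numbers the rushed version is cheaper for the first two quarters and the careful version is cheaper from the third quarter onward. Which one is “right” depends on the time-scale you are deciding on.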

A photo I took after one of the many wildfires in California.

Another analogy to describe this is “putting out fires” vs “fireproofing” or “building fire breaks.” If all anyone does on a daily basis is put out fires in their immediate vicinity, solving short-term problems, everyone looks productive and heroic. The endeavor succeeds impressively for a time, then gradually slows, and then declines, often past the point where it can be saved.

The time-scale that you make decisions on determines the ratio of “fire-fighting” to “fireproofing” that you should do. In other words, there’s a balance between short and long-term thinking. It’s not that we should never think short-term – when something breaks or is dangerous, we should be able to act quickly – but we need to find the right balance between short and long-term thinking, ideally aligning the two. You can do everything “right,” but if your idea of “right” and “wrong” is with reference to just a few time-scales, you create fragility on the others. These fragilities can amplify and resonate until the entire plane explodes. Technical debt has been covered fairly extensively, and it’s worth reading about and considering its implications in many aspects of life.

Eliminating vs Solving Problems

Of all of the points I’ve made so far, I think this is the most uncomfortable to bring up.

When you’re holding a hammer, everything looks like a nail. If you are being paid to be an engineer, your first instinct will be to engineer everything. In reality, the most ingenious solutions often take the form of some upstream change or frame-adjustment or bundling that makes the problem vanish without any work from anybody. In fact, often it means doing less. These decisions are so elegant that they can be invisible and criminally underappreciated. Like black magic they elicit confusion and misunderstanding, and they threaten the entire basis of meritocracy.

I can think of one example off the top of my head, a story from a Russell Ackoff lecture about an apartment building with residents complaining about how slow the elevator was. Every day, as they entered and left the building, they dreaded the wasted time as they rushed to get to their appointments. They begged the apartment complex to put in a new elevator. The apartment manager got many bids from contractors, all far exceeding the budget. The situation seemed hopeless.

At some point, an apartment employee with a background in psychology had the idea of installing mirrors in and around the elevator. The complaints over the speed of the elevator vanished. The problem was not elevator speed; the problem was boredom and frustration. Making the elevator faster was just one possible solution.

The hierarchical structure of organizations can make these “problem-eliminations” difficult to implement, as generally, when someone brings you a problem (make the elevator fast) they have already determined the problem framing, they’re already invested in the outcome, there’s already sunk cost, and they just want someone to do it. If you own an elevator replacement company, you’re not in a good position to say “oh, just put in some mirrors” – and you likely wouldn’t be compensated for the contribution, despite considerable value being created. The foundation has already been poured by the context and incentive structures.

In information systems and software, it’s worth considering from first principles the actual experience and needs of your users. Removing features is just as important as adding them when those features are costly to maintain and either don’t help or actively hurt the human-centric experience. For analytics and data work, it’s worth considering which data-points actually matter in decision-making, as simplifying is just as important as developing. This becomes almost impossible when people in (or outside of) an organization strongly identify with and vouch for certain features.

Cruft exists on many levels, and if you are a manager, you can’t expect the people tasked with making and maintaining unneeded things to be able to tell you this while their incentive structure tells them not to and management just wants them to stay busy. Naturally the ideal solution is to redesign these structures, though if that’s out of the budget you can hang mirrors all around the office.

A photo of some raindrops I snapped in the San Juan Islands

Conclusion

That’s all for now! We covered the importance of building on good foundations, having adaptable systems and software, clarifying blurry goals or feedback systems, technical debt (or the tradeoff between timescales in decision-making), and eliminating problems instead of solving them. I hope this gave a useful voice to some of the systemic factors and wicked problems in engineering, R&D, and information systems.